Best-in-class deployments with best-in-class GPUs

Introducing next-gen AI capabilities that combine the latest orchestration and hardware innovations, made in Europe

Get in touch

Hardware meets Orchestration

Arkane Cloud and Codesphere are partnering to provide the best of both worlds in a new European datacenter:

Innovative hardware and best-in-class deployment orchestration and development workflows


Unparalleled distribution

Offering unparalleled experience in distribution, Arkane will be the facilitator running the datacenter.

Go to Arkane

Best-in-class Orchestration

Codesphere makes deploying easy, offering everything from cloud orchestration to path-based routing.

Go to Codesphere

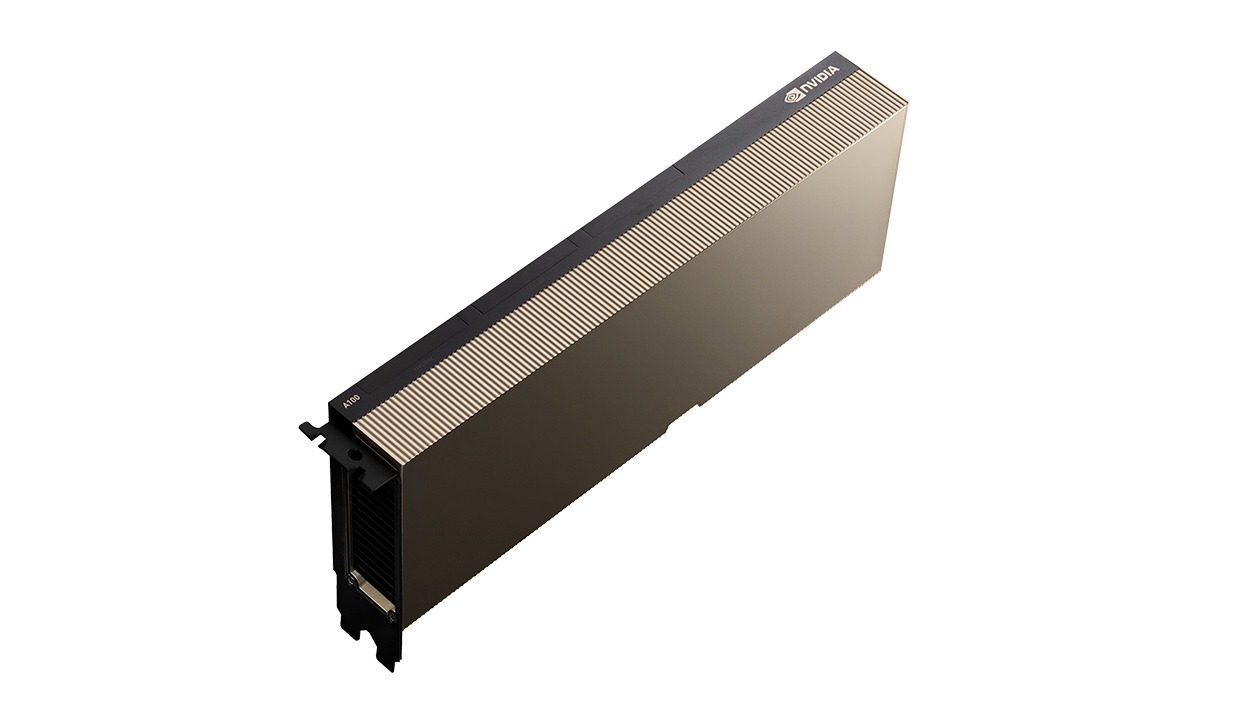

State-of-the-art hardware

GIGABYTE's next-gen GPU architecture lays the foundation for a state-of-the-art datacenter.

Go to GIGABYTE

Unlock the Benefits of Self-Hosted Cloud GPU Solutions

Get rid of the challenges of self-hosting GPUs and stay ahead of the competition.

Why self-hosted

Data compliance

Control over sensitive data

Control over infrastructure

Cheaper at scale

Challenges

Complex to set up and maintain

Inefficient autoscaling: slow startups lead to overprovisioning

Dependency on hyperscalers for a good developer experience (DX)

| How we solve complexity challenges | How we solve scaling challenges |
|---|---|
| Zero-config: cloud provisioning handled | Patented cold-start technology → no need for overprovisioning |
| Dev self-service: developers can build and deploy GPU-powered projects with just a few clicks | Easy up- and downscaling |
| Easily up- or downgrade plans without additional cost or overhead | Save 90% for low-traffic, high-resource applications |
| | Make infrastructure more efficient by improving packing density |

State of the art, right from the heart of the EU

Located in Lyon, France, the Cloud GPU platform delivers high performance with unique benefits:

GDPR compliant

Low latency for European users

Data never leaves Europe


NVIDIA A5000

We currently offer dedicated NVIDIA A5000 GPUs to power your projects.

Each dedicated unit comes with the following specs:

- 8 vCPUs

- 32 GB memory

- 200 GB disk storage

- Clusters of x2, x4 and x8 available

More on the way

We are positioned to provide the latest hardware as soon as it becomes available.

Currently testing hardware like:

- AMD MI300 Series

- H200

- GH200

- GB200

We handle the complex DevOps for you in the background, eliminating context switches and bottlenecks without any vendor lock-in.


Decentralized Organization

Parallelized Feedback Cycles

Tech-Agnostic Choice of Tools

Managed Services

AI Models

No Code

Custom Code

Developer Self-Services

Host your custom LLM in under 2 minutes.

Leverage the power of GPUs to host any LLM using llama.cpp, Ollama, LangChain, or any other provider that runs on Linux.

Go from zero to a functioning LLM, including an OpenAI-compatible API, in less than two minutes.
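To illustrate what OpenAI API compatibility can look like from the client side, here is a minimal sketch using only the Python standard library. It assumes a self-hosted server (for example Ollama or the llama.cpp server) that exposes the OpenAI-style `/chat/completions` route. The base URL, the model name, and the helper names `build_chat_request` and `ask` are placeholders for illustration, not part of the platform described here.

```python
import json
import urllib.request

# Assumption: an OpenAI-compatible server is reachable at this base URL.
# Both values are placeholders; replace them with your own deployment's details.
BASE_URL = "http://localhost:11434/v1"
MODEL = "llama3"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL):
    """Build a POST request for the OpenAI-style /chat/completions route."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, base_url=BASE_URL, model=MODEL, timeout=60):
    """Send the prompt and return the text of the first completion choice."""
    request = build_chat_request(prompt, base_url, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a server running, `ask("Say hello in one short sentence.")` would return the model's reply as a string. Because the request follows the OpenAI chat format, existing OpenAI client code can usually be pointed at such an endpoint by changing only the base URL.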

Let's get in touch!

Feel free to reach out to us directly or read one of our whitepapers available below!

Want to learn more?

We offer different whitepapers on relevant topics. Learn more on navigating LLMs here or browse our other whitepapers.

Navigating the LLM deployment dilemma

Whitepaper on rising GPU costs and innovative solutions. Why self-hosting and modern cloud orchestration are the way to go.

Download